Matrix board performance measurements

| authors: | Joost Baars |
|---|---|
| date: | June 2020 |

Description

The matrix board performance measurements mainly measure the connect and disconnect duration for various QoS configurations. Comparing the connect and disconnect durations across QoS policies makes the overhead of each policy visible. Quality of Service (QoS) policies are a set of configurable parameters that control the behavior of a DDS system (how and when data is distributed between applications). Some of these parameters affect the resource consumption, the fault tolerance, or the reliability of the communication.

Each DDS entity (reader, writer, publisher, subscriber, topic, and participant) has QoS policies associated with it. Some policies apply to only one entity type, others can be used on multiple entity types.

Measurement 1

The performance measurements application was executed with 50 measurements for each configuration. 15 different custom configurations were executed in these tests.

Setup

The setup consists of two Raspberry Pis, each with a clean install of Raspbian Buster Lite 2020-03-13. The Raspberry Pis used are a Raspberry Pi 2 model B and a Raspberry Pi 3 model B.

These Raspberry Pis only have the necessary dependencies installed. Both Raspberry Pis ran PTP in the background for time synchronization.

The setup is shown in the image below. All devices are connected using Ethernet cables (CAT6 cables were used).

![Internet <--> [Router]

[Router] <-left-> [Raspberry Pi 2 model B] : 2m ethernet

[Router] <-right-> [Raspberry Pi 3 model B] : 2m ethernet

[Router] <--> [Laptop] : SSH, 2m ethernet](../../../../_images/plantuml-17ee058fb1ef52d77ed7a03f37655b0aa1cff78d.png)

Every device is connected over Ethernet to reduce the deviation in the communication. Additionally, this results in better accuracy for PTP (time synchronization).

Measured QoS policies

Every configuration in this list is identical to the default configuration, with only one parameter changed. The QoS policy is changed for both the writer and the reader. The same QoS policies are active on the writer and the reader to avoid QoS incompatibilities.
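The "default plus one change" scheme can be sketched in Python as follows. `QosConfig`, its field names, and `changed_policies` are hypothetical and only illustrate the idea; they are not the Cyclone DDS API. The values are the ones given in the policy descriptions on this page, `None` stands for a policy that is left at the library default, and only a subset of the configurations is shown.

```python
from dataclasses import dataclass, fields, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class QosConfig:
    """Hypothetical container; None means 'left at the library default'."""
    liveliness: Tuple[str, int] = ("manual_by_participant", 2500)  # kind, lease in ms
    reliability: Tuple[str, int] = ("reliable", 10_000)  # kind, max blocking time in ms
    deadline_ms: Optional[int] = None
    lifespan_ms: Optional[int] = None


DEFAULT = QosConfig()

# Each measured configuration overrides exactly one policy of the default.
CONFIGS = {
    "default": DEFAULT,
    "short_deadline": replace(DEFAULT, deadline_ms=1100),
    "long_deadline": replace(DEFAULT, deadline_ms=4500),
    "short_lifespan": replace(DEFAULT, lifespan_ms=50),
    "long_lifespan": replace(DEFAULT, lifespan_ms=4000),
    "short_liveliness": replace(DEFAULT, liveliness=("automatic", 50)),
    "long_liveliness": replace(DEFAULT, liveliness=("automatic", 4000)),
}


def changed_policies(config: QosConfig) -> list:
    """Names of the policies that differ from the default configuration."""
    return [f.name for f in fields(config)
            if getattr(config, f.name) != getattr(DEFAULT, f.name)]


for name, config in CONFIGS.items():
    print(f"{name}: {changed_policies(config)}")
```

Keeping every configuration as a single override of one shared default makes it easy to attribute a difference in the results to one policy.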

More information about the durability types can be found here: Durability.

By default, Cyclone DDS has some QoS policies enabled/configured. These are described in Cyclone DDS default QoS policies.

Cyclone DDS default QoS policies

The default QoS policies that are already enabled by Cyclone DDS are the following:

The default QoS policies enabled for the writer:

The default QoS policies enabled for the reader:

These QoS policies were taken from the source code of Cyclone DDS. The file can be found in Default QoS policies Cyclone DDS. The functions ddsi_xqos_init_default_reader and ddsi_xqos_init_default_writer contain the default QoS policies for the reader and the writer.

Default

The liveliness is configured as manual by participant (the participant must send a message manually within a certain timeframe). The liveliness time is configured as 2500 milliseconds. The reliability is set to reliable with a maximum blocking time of 10 seconds.

Durability

The durability configures whether messages must be stored and, if so, where they are stored.

The name of the measurement is the durability set in the QoS policy. For example, with durability_transient, durability transient is enabled in the durability QoS policy.

More information about this QoS policy can be found here: Durability.

When the name contains service, there is an additional configuration.

Durability transient service

More settings can be configured for the durability with the durability service QoS policy.

In the two tests with the durability service, only the cleanup delay was changed (how long information regarding an instance must be kept). The history policy was set to KEEP ALL. The resource limits within this service were all set to 25 messages/instances and samples per instance.

The durability_transient_service_long is configured with a time of 4 seconds.

The durability_transient_service_short is configured with a time of 10 milliseconds.

More information about this QoS policy can be found here: Durability service

Deadline

The deadline sets a requirement for the message frequency of the writer. If a writer does not send a new message within the deadline time, the reader is notified. When there is a backup writer, the reader automatically connects to the backup writer.

The long_deadline is configured with 4.5 seconds. The short_deadline is configured with 1.1 seconds. The short_deadline is above 1 second because that is the interval at which the matrix board sends its sensor data. If it were below 1 second, the matrix board would exceed the deadline.
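The relation between the write interval and the deadline can be illustrated with a small Python model. This is only a sketch of the reasoning and not DDS code: `missed_deadlines` is a hypothetical helper, and the write timestamps assume an ideal writer that publishes exactly once per second.

```python
def missed_deadlines(write_times_s, deadline_s):
    """Return the gaps between consecutive writes that exceed the deadline."""
    gaps = [later - earlier
            for earlier, later in zip(write_times_s, write_times_s[1:])]
    return [gap for gap in gaps if gap > deadline_s]


# Idealized matrix board: one sensor message per second.
writes = [0.0, 1.0, 2.0, 3.0, 4.0]

print(missed_deadlines(writes, 1.1))  # [] -> the short_deadline is never missed
print(missed_deadlines(writes, 0.9))  # [1.0, 1.0, 1.0, 1.0] -> every gap is too long
```

In practice the write interval jitters slightly, which is why the deadline needs some margin above the nominal 1 second interval.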

Lifespan

The lifespan sets how long messages can still be sent to new readers. A newly connected reader can therefore still read already sent messages, as long as they are within the configured lifespan.

The long_lifespan is configured with 4 seconds. The short_lifespan is configured with 50 milliseconds.

Liveliness

The liveliness makes it possible to know whether writers/readers are still alive on a topic. The liveliness can be managed automatically by DDS (by pinging the topic once in a while). It can also be managed manually, which is suitable if the application already sends messages within the maximum liveliness time.

The short_liveliness is configured to automatically send messages (liveliness_automatic). The time is set to 50 milliseconds. The long_liveliness is also configured to automatically send messages. The time is set to 4000 milliseconds. Four seconds was chosen because this is below the five-second liveliness of the Active Matrixboards topic. The manual_liveliness is configured to base the liveliness on the topic. Messages must be sent manually (liveliness_manual_by_topic). The default configuration bases the liveliness on the participant. Messages must also be sent manually in this configuration (liveliness_manual_by_participant).

Reliability

The reliability can be configured to make sure that messages are received correctly by the reader. Reliability reliable makes sure that messages are received correctly. If something goes wrong in the communication, the message is resent.

The reliable application uses reliability reliable for the QoS policy. Therefore, messages are checked for correct reception. This setting is the same as the default QoS policy.

The unreliable application uses reliability best effort for the QoS policy. Therefore, messages are not checked for correct reception.

Results measurement 1

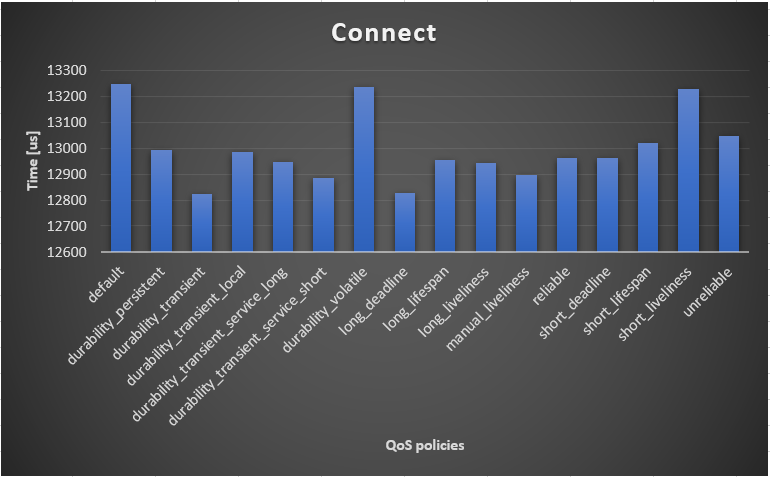

For each custom configuration, the performance measurements were executed 50 times. The graphs in this section show the average of these 50 measurements.

For each result described below, the deviation is also shown. This deviation is the difference between the highest value and the lowest value of a custom configuration. Only one deviation value is shown per graph because the others did not give interesting results (more are shown where explicitly mentioned). The value shown is the deviation (over the 50 measurements) of the default QoS policy.
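As a minimal sketch of how the average and the deviation as defined above (highest minus lowest value) can be computed, consider the Python snippet below. The function name `summarize` and the sample values are made up for illustration and are not the measured data.

```python
from statistics import mean


def summarize(samples):
    """Return (average, deviation, deviation as a percentage of the average).

    The deviation is the range of the samples: highest minus lowest value.
    """
    average = mean(samples)
    deviation = max(samples) - min(samples)
    return average, deviation, 100.0 * deviation / average


# Made-up connect durations in microseconds (not the measured data).
samples_us = [12_000, 13_500, 12_800, 14_100, 12_600]

average, deviation, relative = summarize(samples_us)
print(f"average={average} us, deviation={deviation} us, relative={relative:.1f}%")
```

With the numbers reported for the connect duration, a deviation of 4321 microseconds on an average of roughly 13 milliseconds gives 100 * 4321 / 13000, which is about 33%.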

The connect durations in the graph below are in microseconds. The default configuration is exactly the same as the reliable configuration (see Reliability). Therefore, the longer connect duration of the default configuration is probably caused by it being the first measurement. The average connect duration over all QoS policies lies around 13 milliseconds. The durability volatile and the short liveliness QoS policies are a bit higher than the other QoS policies. Durability volatile should not influence the connect duration. Therefore, its peak is probably due to fluctuations in the connect duration. As can be seen below, the maximum measured deviation is high (33% of the average result). This shows that there is quite a lot of fluctuation in the connect duration.

Maximum deviation: 4321 microseconds

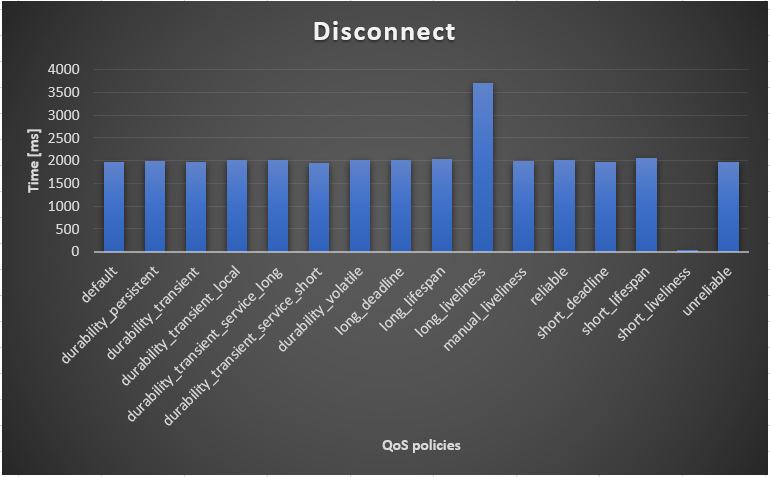

The disconnect duration is mostly the same across the QoS policies. Two QoS policies are noticeably different in the graph below. The long_liveliness QoS policy takes a lot longer than the others because a device is only confirmed as “disconnected” after a longer time. The short_liveliness QoS policy takes a lot less time for the same reason: it detects a disconnect once it has received no messages from the device for 50 milliseconds. Therefore, the result is below 50 milliseconds. The lowest result of the 50 measurements with the short liveliness was only 9 milliseconds. The highest was 49 milliseconds. This shows that the QoS policy does its job very effectively (no measurements equal to or above 50 milliseconds). The other QoS policies do not seem to matter much for the disconnect duration.

Maximum deviation: 960

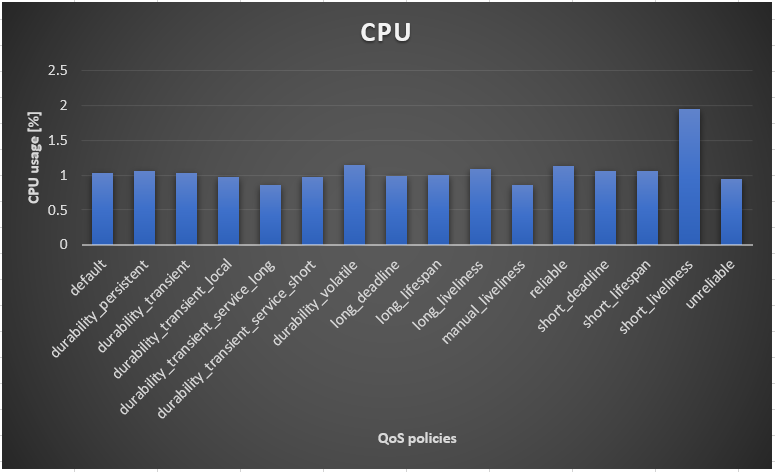

The CPU usage can be seen in the graph below. Most of the average CPU values lie around 1%. One QoS policy clearly requires more CPU: the short liveliness QoS policy. This is expected because it must ping the other devices a lot more often. The application must ping once within 50 milliseconds, compared to once within 4 seconds for the long liveliness.

Maximum deviation: 2.48%

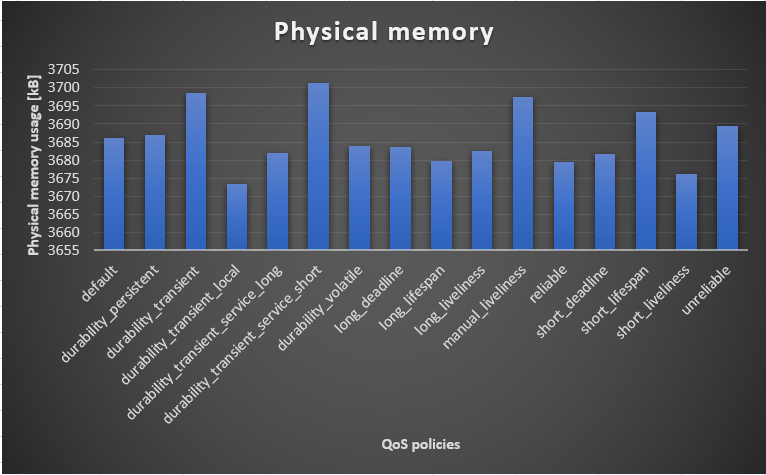

There are no big differences in the physical memory usage. These measurements were also rather stable, with a maximum difference of less than 150 kilobytes between the QoS policies (4% difference). The durability transient and durability transient service long QoS policies seem to use more memory. The manual liveliness and short lifespan also seem to use a little more memory.

As expected, the durability transient QoS policy uses more memory (old messages are stored in memory). The durability transient service short has around the same results as the durability transient QoS policy. The difference between the long and short variants of the durability transient service is that the short variant keeps the information regarding an instance for 10 milliseconds instead of 4 seconds. Possibly, this accounts for the difference in the graph.

Maximum deviation: 256.75 kB

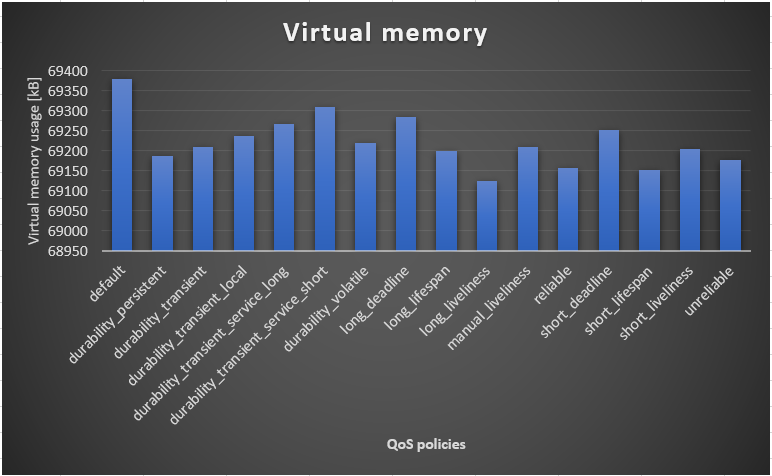

The virtual memory usage was very stable and differs by at most 400 kB between the QoS policies (<1% difference). The differences could be attributable to measurement error. The default QoS policy is the same as the reliable QoS policy. Therefore, the deviation between the measurements seems to be caused by measurement inaccuracy rather than by the QoS policies.

Maximum deviation: 1442.1 kB

Connect duration conclusions

The connect duration was mostly close to 13 milliseconds. This did not differ much between the QoS policies. There was quite a lot of deviation between the individual connect duration measurements. This could be because short times (milliseconds) are measured and the Raspberry Pis run a Linux OS that is not real-time. The OS could therefore increase the deviation between the measurements.

Disconnect duration conclusions

The disconnect duration is mostly determined by the liveliness QoS policy. The matrix board application writes a message to its topic every second. Therefore, the liveliness is updated every second (also with the liveliness set to automatic).

The maximum deviation between the disconnect measurements is always less than the configured liveliness time. This is expected, because the disconnect duration measurement stops when the liveliness time is reached.

When the liveliness was set to 2.5 seconds and the writer wrote a message every second, the maximum measured deviation was a little less than a second. Also, the results were always between 1.5 seconds and 2.5 seconds. This makes sense because when the liveliness timer has counted down to 1.5 seconds, a new message is sent by the writer and the liveliness timer is reset to 2.5 seconds.

When the automatic liveliness was set to 50 milliseconds, the maximum deviation was measured to be 40 milliseconds! The shortest measured disconnect duration was only 9 milliseconds. This suggests that the liveliness ping messages are generally sent every 40 milliseconds (with 50 milliseconds liveliness configured).
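The reasoning about the disconnect duration can be captured in a simple model, sketched below in Python. It assumes that liveliness is asserted at a fixed interval and that a device can disappear at any moment within that interval. The function names are hypothetical and the model ignores network and scheduling delays.

```python
def delay_bounds_ms(lease_ms, assert_interval_ms):
    """Bounds of the detection delay when liveliness is asserted at a fixed interval.

    A device that vanishes right after an assertion is detected after the full
    lease; one that vanishes just before its next assertion is detected after
    lease - interval.
    """
    return lease_ms - assert_interval_ms, lease_ms


def inferred_interval_ms(shortest_ms, longest_ms):
    """Estimate the assertion interval from the spread of the measured delays."""
    return longest_ms - shortest_ms


# Default configuration: 2.5 s lease, one message per second.
low, high = delay_bounds_ms(lease_ms=2500, assert_interval_ms=1000)
print(low, high, (low + high) / 2)  # 1500 2500 2000.0

# short_liveliness: measured delays between 9 ms and 49 ms with a 50 ms lease.
print(inferred_interval_ms(shortest_ms=9, longest_ms=49))   # 40
print(delay_bounds_ms(lease_ms=50, assert_interval_ms=40))  # (10, 50)
```

If the moment of disconnecting is spread evenly over the interval, the average detection delay is the midpoint of the bounds, which is 2 seconds for the default configuration. The modeled bounds of 10 to 50 milliseconds for the short liveliness roughly match the measured 9 to 49 milliseconds.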

Resource usage

The liveliness automatic QoS policy has a noticeable impact on the CPU usage, especially when the liveliness is configured to be short. The durability transient and durability transient service long seem to have a small impact on the physical memory usage.

Measurement 2

A second measurement was executed to see the scalability difference. The performance measurements with the default QoS were executed twice: once with 2 devices on the network (master + slave) and once with 4 devices on the network (master + 3 slaves).

The scalability measurements were not reliable because it was not possible to have the same type of connection for more than 4 devices. The router used only had 4 Ethernet ports. More devices could be connected using Wi-Fi, but Wi-Fi is a lot slower and could affect the measurements negatively. Additionally, there were only 4 Raspberry Pis. Therefore, if more devices were added, laptops with different operating systems would have had to be used (which could also negatively affect the test).

Setup

The setup consists of 4 Raspberry Pis, each with a clean install of Raspbian Buster Lite 2020-03-13. The Raspberry Pis used are:

- Raspberry Pi 2 model B

- Raspberry Pi 3 model B

- 2 x Raspberry Pi 3 model B+

These Raspberry Pis only have the necessary dependencies installed. All 4 Raspberry Pis ran PTP in the background for time synchronization.

The setup is shown in the image below. All devices are connected using Ethernet cables.

![Internet <--> [Router]

[Router] <--> [Raspberry Pi 2 model B] : 2m ethernet

[Router] <--> [Raspberry Pi 3 model B] : 2m ethernet

[Router] <--> "2" [Raspberry Pi 3 model B+] : 2m ethernet

[Router] <-right-> [Laptop] : Wifi](../../../../_images/plantuml-c303116f8f9df1ea87bc8066036b0bc92643c998.png)

The only devices connected to this router are the ones shown in the image above.

During the run with only 2 Raspberry Pis, both Raspberry Pi 3 model B+ boards were used.

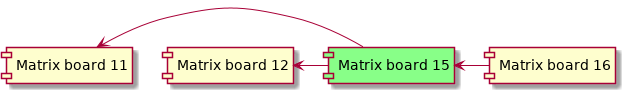

The master had an ID of 15 in the tests.

2 devices test: The only slave had an ID of 11. 4 devices test: The slaves had the IDs 11, 12, and 16.

Results

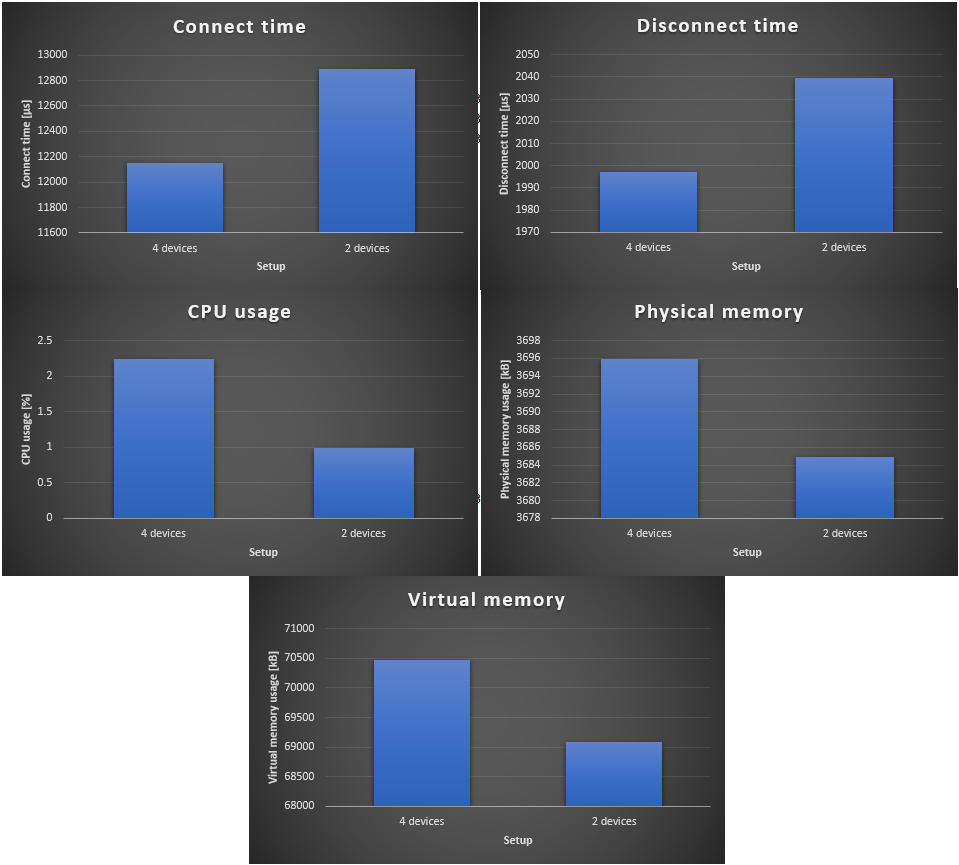

For each setup (2 devices and 4 devices), 50 measurements were executed. These measurements were executed on the default configuration.

The results of these measurements can be seen in the graphs below. They show that the connect and disconnect times were slightly faster with 4 devices. The difference in connect time is around 5.6%. More measurements should be executed to see whether this difference is attributable to the deviation between the measurements or to the number of devices.

For the disconnect duration, there was only a difference of 2%. This is probably due to the deviation between the results. The disconnect duration can lie anywhere between 1.5 s and 2.5 s. Therefore, the average result is expected to be around 2 seconds.

There is a huge difference between the CPU usages. The measurement with 4 devices uses over 2x more CPU on average. As shown in the UML diagrams below, the measurement with 4 devices has two more devices connected to the master. This is expected to use more CPU. It is probably also the reason that the physical and virtual memory usage is a bit higher in the 4 devices measurement.

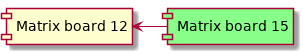

Communication matrix board 15 with 2 devices:

Communication matrix board 15 with 4 devices:

Note

Reliability

The reliability of the test is not high because the results are most likely attributable to how the matrix board application works rather than to the scalability of DDS. This test should be re-executed with a minimum of 5 devices on the network. The master and 2 slaves with an ID below the master should be connected with an Ethernet cable. The other devices may be connected using Wi-Fi (the connect and disconnect durations are not affected then). The second test should be executed with >5 devices.